Deep Reinforcement Learning Breakthrough: Robots Mastering Complex Tasks Autonomously

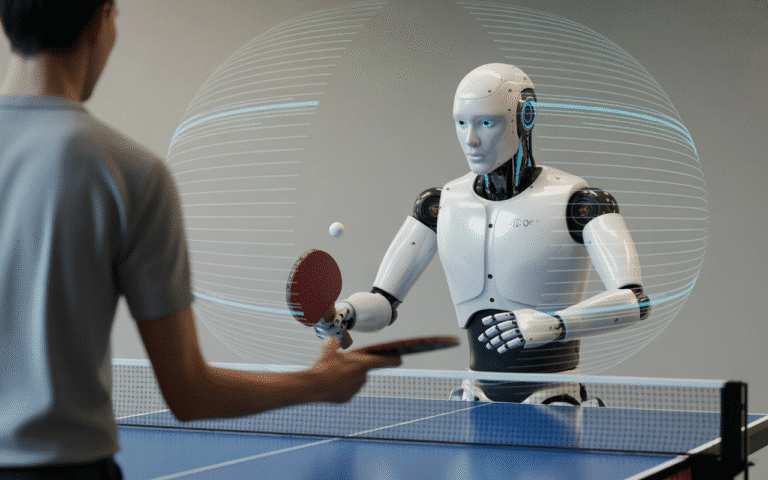

For decades, the dream of truly autonomous robots capable of learning and adapting to complex environments has captivated researchers and the public alike. While significant strides have been made, teaching robots intricate tasks without constant human intervention has remained a formidable hurdle. Now, a groundbreaking development from Google DeepMind is bringing this vision closer to reality, demonstrating robots that can learn highly complex skills, such as playing table tennis, with remarkable independence.

The Quantum Leap in Robot Learning

The core of this achievement lies in advanced deep reinforcement learning (DRL) techniques, combined with a novel approach to robotic training. Traditional methods often require extensive human programming or supervised demonstrations, limiting a robot’s ability to generalize or adapt to unforeseen circumstances. Google DeepMind’s innovation pivots away from this, empowering robots to learn through trial and error, much like humans do, but at an accelerated pace.

This breakthrough isn’t just about performing a task; it’s about the process of learning. The robots are not explicitly programmed for every move in a game of table tennis. Instead, they are given a goal and learn the optimal strategies and precise motor control required to achieve it, iteratively refining their performance based on feedback from their environment.
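To make the trial-and-error idea concrete, here is a minimal sketch of learning from reward feedback alone. It is not DeepMind's method: it uses a simple epsilon-greedy value estimate rather than deep reinforcement learning, and the paddle angles, success probabilities, and function names are all assumptions invented for illustration.

```python
import random

# Hypothetical toy setup: the "robot" picks one of a few paddle angles and
# is only told whether the return landed on the table. Nothing here comes
# from DeepMind's system; it only illustrates learning from reward feedback.
ANGLES = [10, 20, 30, 40, 50]  # candidate paddle angles in degrees (assumed)
SUCCESS_PROB = {10: 0.1, 20: 0.3, 30: 0.9, 40: 0.4, 50: 0.1}  # assumed environment


def play_one_rally(angle, rng):
    """Return reward 1.0 if the simulated return lands on the table, else 0.0."""
    return 1.0 if rng.random() < SUCCESS_PROB[angle] else 0.0


def learn_by_trial_and_error(episodes=5000, epsilon=0.1, seed=0):
    """Estimate the value of each angle with an epsilon-greedy strategy."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in ANGLES}  # running estimate of reward per angle
    count = {a: 0 for a in ANGLES}    # how often each angle has been tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            angle = rng.choice(ANGLES)                    # explore a random angle
        else:
            angle = max(ANGLES, key=lambda a: value[a])   # exploit the best so far
        reward = play_one_rally(angle, rng)
        count[angle] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        value[angle] += (reward - value[angle]) / count[angle]
    return value


if __name__ == "__main__":
    estimates = learn_by_trial_and_error()
    best = max(estimates, key=estimates.get)
    print("best angle found:", best)
```

The agent is never told which angle is correct. It only sees a reward after each attempt, and its estimates improve as it balances trying new angles against repeating the one that has worked best so far.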

Visual Language Models: The Robot’s Self-Correction Mechanism

A key enabler of this capacity to learn without human supervision is the integration of a visual language model. This sophisticated AI component acts as the robot’s internal evaluator and coach. By observing the robot’s actions and their outcomes, the visual language model can assess performance, identify errors, and generate feedback that guides the robot’s subsequent learning iterations.

Imagine a robot serving a ping-pong ball. If the serve goes out of bounds, the visual language model can process this visual information, understand the ‘error’ in human-interpretable terms (e.g., “the ball went too far to the left”), and use this understanding to adjust the robot’s motor commands for the next attempt. This semantic understanding of its own performance is what truly sets this advancement apart, allowing for continuous self-improvement without a human explicitly telling it what went wrong.
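The serve example can be sketched as a small feedback loop. This is only an illustration under stated assumptions: `describe_outcome` is a rule-based stand-in for the visual language model (a real system would interpret camera images), the one-dimensional "physics" and the 0.76 m half-width are simplifications, and every function name and parameter is hypothetical.

```python
def describe_outcome(landing_x, table_half_width=0.76):
    """Stand-in for the visual language model (hypothetical).

    Turns an observed landing position, in metres from the table's centre
    line, into a human-readable verdict.
    """
    if landing_x < -table_half_width:
        return "the ball went too far to the left"
    if landing_x > table_half_width:
        return "the ball went too far to the right"
    return "the ball landed in bounds"


def adjust_aim(aim, feedback, step=0.2):
    """Shift the aim in the direction opposite to the reported error."""
    if "left" in feedback:
        return aim + step
    if "right" in feedback:
        return aim - step
    return aim


def simulate_serve(aim, bias=-1.0):
    """Toy physics (assumed): the ball lands at the aim plus a fixed bias
    that the robot does not know about."""
    return aim + bias


def practice_serves(max_attempts=20):
    """Serve repeatedly, using the textual feedback to correct the aim."""
    aim = 0.0
    history = []
    for _ in range(max_attempts):
        landing = simulate_serve(aim)
        feedback = describe_outcome(landing)
        history.append((round(aim, 2), feedback))
        if feedback.endswith("in bounds"):
            break
        aim = adjust_aim(aim, feedback)
    return aim, history


if __name__ == "__main__":
    final_aim, history = practice_serves()
    for attempt, (aim, feedback) in enumerate(history, start=1):
        print(f"serve {attempt}: aim={aim:+.2f} m -> {feedback}")
```

Keyword matching on the feedback string keeps the sketch short. The point is only that a description of the outcome, rather than a human correction, drives the next adjustment. With the assumed bias of -1.0 m, the loop settles after three serves.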

Implications for the Future of Robotics

The implications of robots learning complex tasks autonomously are profound. This technology promises to unlock new levels of versatility and efficiency across various sectors:

- Manufacturing & Logistics: Robots could adapt to new product lines or warehouse layouts with minimal re-programming.

- Exploration & Hazardous Environments: Autonomous robots could perform complex maintenance or exploration tasks in places too dangerous or remote for humans.

- Service & Healthcare: From assisting in surgical procedures to elder care, robots could learn to perform nuanced interactions.

- Education & Research: Real-world learning environments could accelerate the development of even more sophisticated AI systems.

This achievement by Google DeepMind signifies a pivotal moment in the journey towards truly intelligent and adaptable robotic systems. It moves us beyond mere automation to a future where robots can independently acquire and master skills, paving the way for unprecedented levels of autonomy and capability.

While challenges remain, the ability of robots to learn complex, nuanced tasks like table tennis without direct human supervision underscores the rapid progress in deep reinforcement learning and AI. We are witnessing the dawn of a new era where robots are not just tools, but intelligent agents capable of learning, adapting, and innovating on their own.